Today we are going to see how we can scrape Hacker News posts using Python and BeautifulSoup in a simple and elegant manner.

The aim of this article is to get you started on a real-world problem while keeping it super simple, so you get familiar with the tools and see practical results as fast as possible.

The first thing we need is Python 3. If you don't have it yet, download and install it before you proceed.

Then install Beautiful Soup with:

```shell
pip3 install beautifulsoup4
```

We will also need the libraries requests, lxml, and soupsieve: requests to fetch the page, lxml to parse the HTML, and soupsieve to use CSS selectors. Install them using:

```shell
pip3 install requests soupsieve lxml
```

Once installed, open an editor and type in:

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requestsNow let's go to the Hacker News home page and inspect the data we can get.

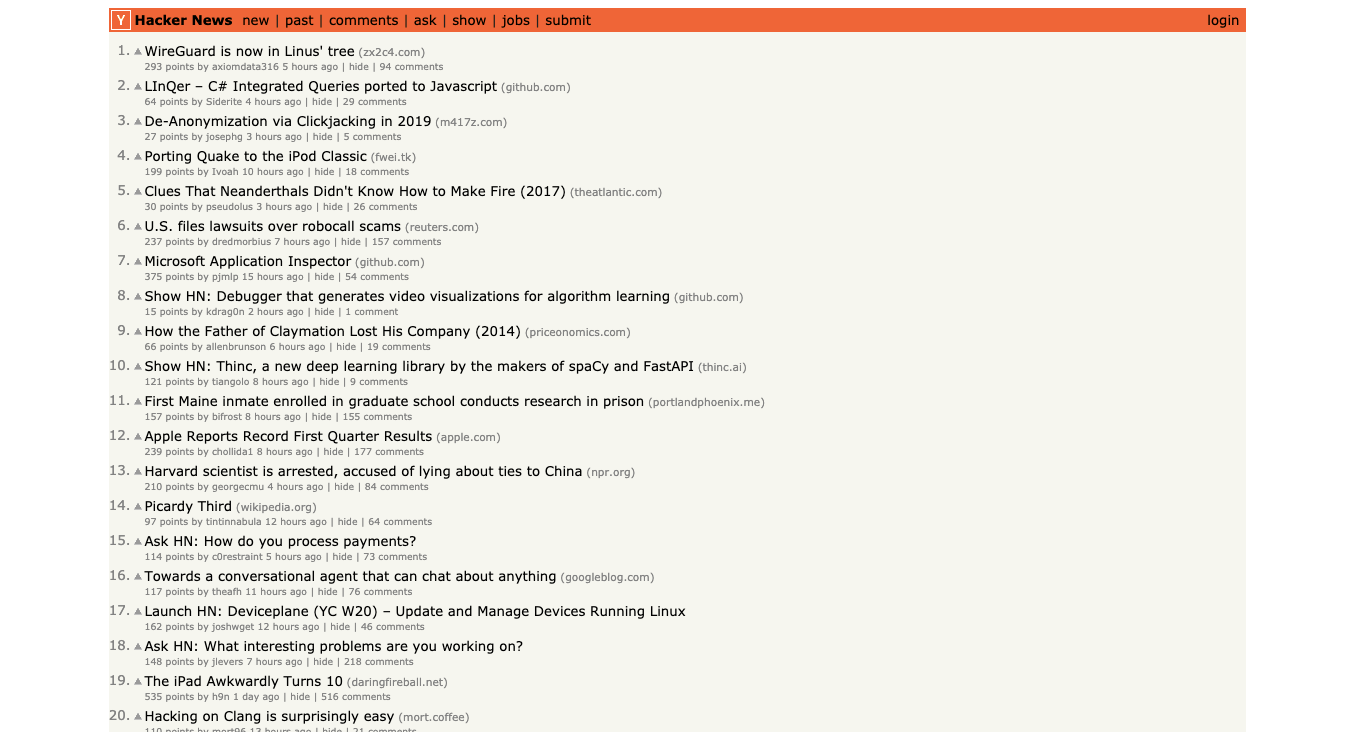

This is how it looks:

Back to our code. Let's fetch this page while pretending to be a browser, which we do by sending a browser's User-Agent header:

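```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

# A desktop Safari User-Agent so the request looks like it comes from a browser
headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://news.ycombinator.com/'

response = requests.get(url, headers=headers)

# response.text holds the HTML; print(response) alone would only show <Response [200]>
print(response.text)
```

Save this as HN_bs.py.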

If you run it:

```shell
python3 HN_bs.py
```

you will see the whole HTML page:

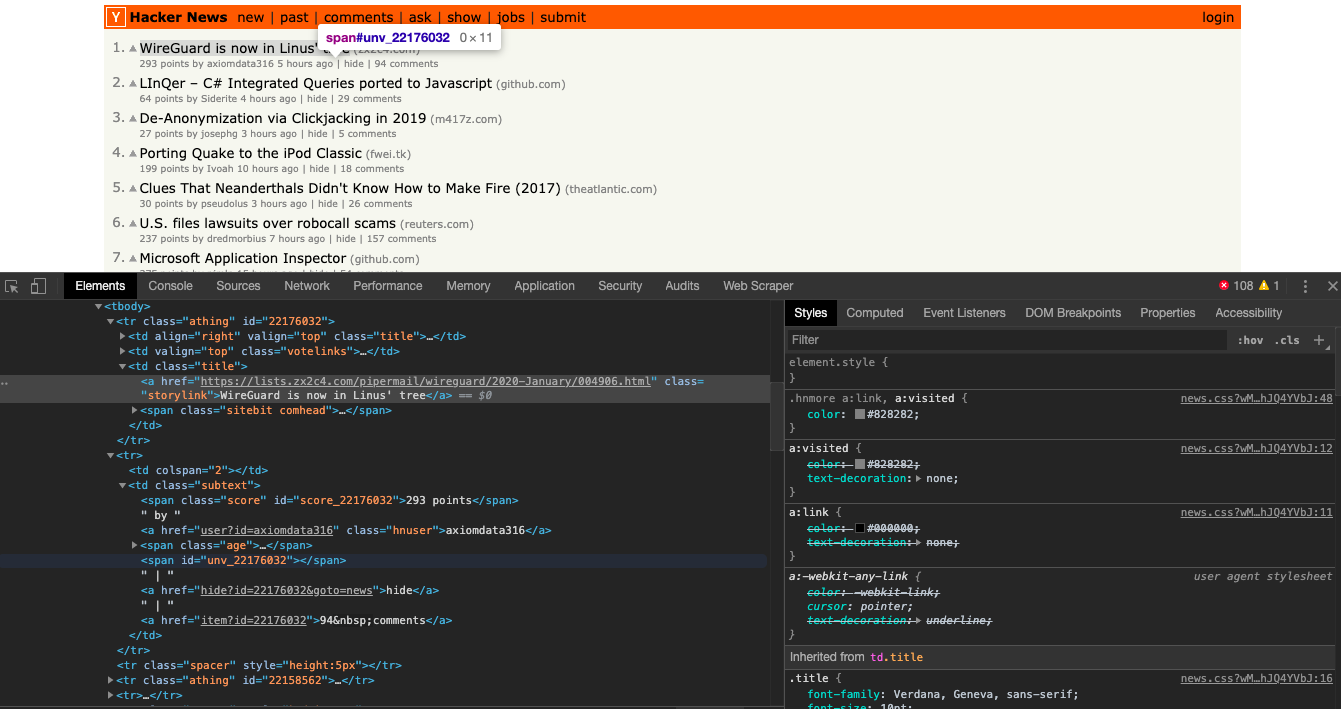
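To see what the custom header actually changes, here is a small sketch that prepares two requests without sending them and compares their User-Agent values. It only uses `requests`, which is already installed; the variable names are illustrative.

```python
import requests

# Same header and URL as in HN_bs.py
headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://news.ycombinator.com/'

session = requests.Session()

# prepare_request merges the session defaults with our headers and builds
# the final request without sending anything over the network.
default_request = session.prepare_request(requests.Request('GET', url))
browser_request = session.prepare_request(requests.Request('GET', url, headers=headers))

print(default_request.headers['User-Agent'])  # identifies itself as python-requests
print(browser_request.headers['User-Agent'])  # the Safari string from headers
```

Without the header, every request announces itself as `python-requests/<version>`, which is easy for a site to spot.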

Now let's use CSS selectors to get to the data we want. To do that, go back to Chrome and open the Inspect tool. We need to get to all the posts. Notice that the data is arranged in a table and each post uses three rows: one for the title, one for the details (score, user, comments), and an empty spacer row.
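A minimal sketch of that three-row layout, run against a small hypothetical HTML snippet instead of the live page. It assumes, as on the Hacker News front page at the time of writing, that each title row carries the class `athing` and the details row directly follows it; `SAMPLE_HTML` and `group_post_rows` are illustrative names, and `html.parser` is used only so the snippet runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical, trimmed-down markup modelled on the Hacker News front page
SAMPLE_HTML = """
<table>
  <tr class="athing" id="101"><td class="title"><a class="storylink" href="https://example.com/a">First post</a></td></tr>
  <tr><td class="subtext"><span class="score">10 points</span> by <a class="hnuser" href="user?id=alice">alice</a></td></tr>
  <tr class="spacer"></tr>
  <tr class="athing" id="102"><td class="title"><a class="storylink" href="https://example.com/b">Second post</a></td></tr>
  <tr><td class="subtext"><span class="score">3 points</span> by <a class="hnuser" href="user?id=bob">bob</a></td></tr>
  <tr class="spacer"></tr>
</table>
"""


def group_post_rows(html):
    """Return (title_row, details_row) pairs, one per post."""
    soup = BeautifulSoup(html, "html.parser")
    pairs = []
    for title_row in soup.select("tr.athing"):
        # The next <tr> sibling holds the score, user and comment links
        details_row = title_row.find_next_sibling("tr")
        pairs.append((title_row, details_row))
    return pairs


for title_row, details_row in group_post_rows(SAMPLE_HTML):
    print(title_row.get_text(strip=True), "|", details_row.get_text(" ", strip=True))
```

Pairing the rows this way keeps each post's title and details together, instead of treating every `<tr>` on its own.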

We just have to loop through the rows to get the data, like this:

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
url = 'https://news.ycombinator.com/'

response = requests.get(url, headers=headers)
# Parse the raw HTML with the lxml parser
soup = BeautifulSoup(response.content, 'lxml')

# Print every table row on the page, followed by a blank line
for item in soup.find_all('tr'):
    print(item)
    print('')
```

This prints all the content in each of the rows. We now need to be smart about the kind of content we want. The first row of each post contains the title, but we have to make sure we don't ask for a title in the other rows, so we add a check. The title link has the class storylink, so the code below makes sure an element with that class exists in THIS row before asking for its text:

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url='https://news.ycombinator.com/'

response=requests.get(url,headers=headers)

soup=BeautifulSoup(response.content,'lxml')

for item in soup.find_all('tr'):

try:

#print(item)

if item.select('.storylink'):

print(item.select('.storylink')[0].get_text())

except Exception as e:

raise e

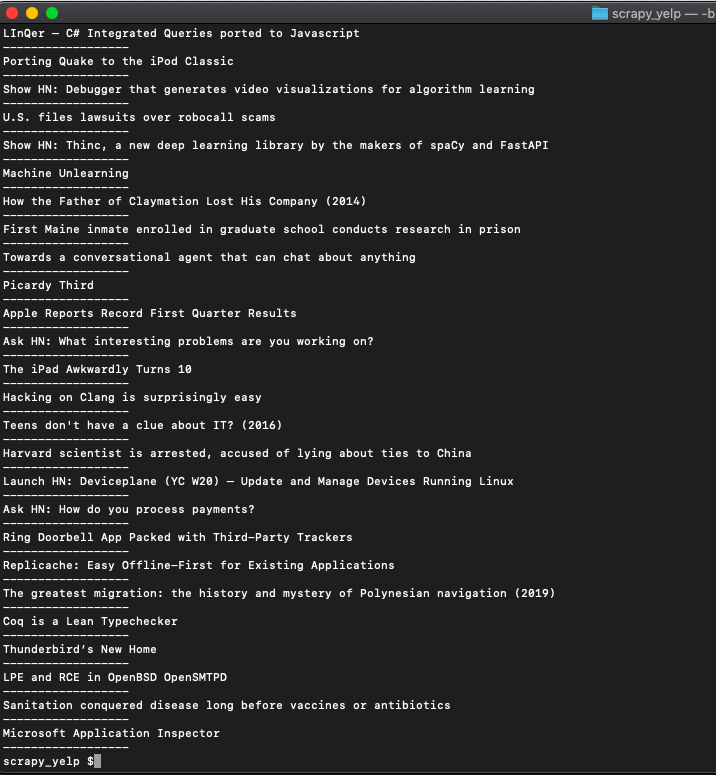

print('')If you run it. This will print the titles...

Bingo!! we got the post titles...
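Hacker News changes its markup from time to time, so a selector like `.storylink` can silently stop matching. Here is a sketch of a more defensive lookup that tries several selectors in order. It assumes newer pages wrap the title link in a `span.titleline` element (check this in the Inspect tool before relying on it); `find_title_link` and the sample rows are hypothetical.

```python
from bs4 import BeautifulSoup

# Selectors tried in order: ".storylink" is what this article uses;
# ".titleline > a" is an assumption covering newer Hacker News markup.
TITLE_SELECTORS = (".storylink", ".titleline > a")


def find_title_link(row):
    """Return the first title <a> found in a row, or None if the row has no title."""
    for selector in TITLE_SELECTORS:
        link = row.select_one(selector)
        if link is not None:
            return link
    return None


# Hypothetical rows in the old and the newer style
OLD_ROW = '<tr class="athing"><td><a class="storylink" href="https://example.com/old">Old markup</a></td></tr>'
NEW_ROW = ('<tr class="athing"><td><span class="titleline">'
           '<a href="https://example.com/new">New markup</a></span></td></tr>')

for html in (OLD_ROW, NEW_ROW):
    row = BeautifulSoup(html, "html.parser").tr
    link = find_title_link(row)
    print(link.get_text(), link["href"])
```

If both selectors return `None` for every row, that is a strong hint the page layout has changed again.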

Using the same process, we can get the other data, such as the link, the HN username, the score, and the number of comments:

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url='https://news.ycombinator.com/'

response=requests.get(url,headers=headers)

soup=BeautifulSoup(response.content,'lxml')

for item in soup.find_all('tr'):

try:

#print(item)

if item.select('.storylink'):

print(item.select('.storylink')[0].get_text())

print(item.select('.storylink')[0]['href'])

if item.select('.hnuser'):

print(item.select('.hnuser')[0].get_text())

print(item.select('.score')[0].get_text())

print(item.find_all('a')[3].get_text())

print('------------------')

except Exception as e:

raise e

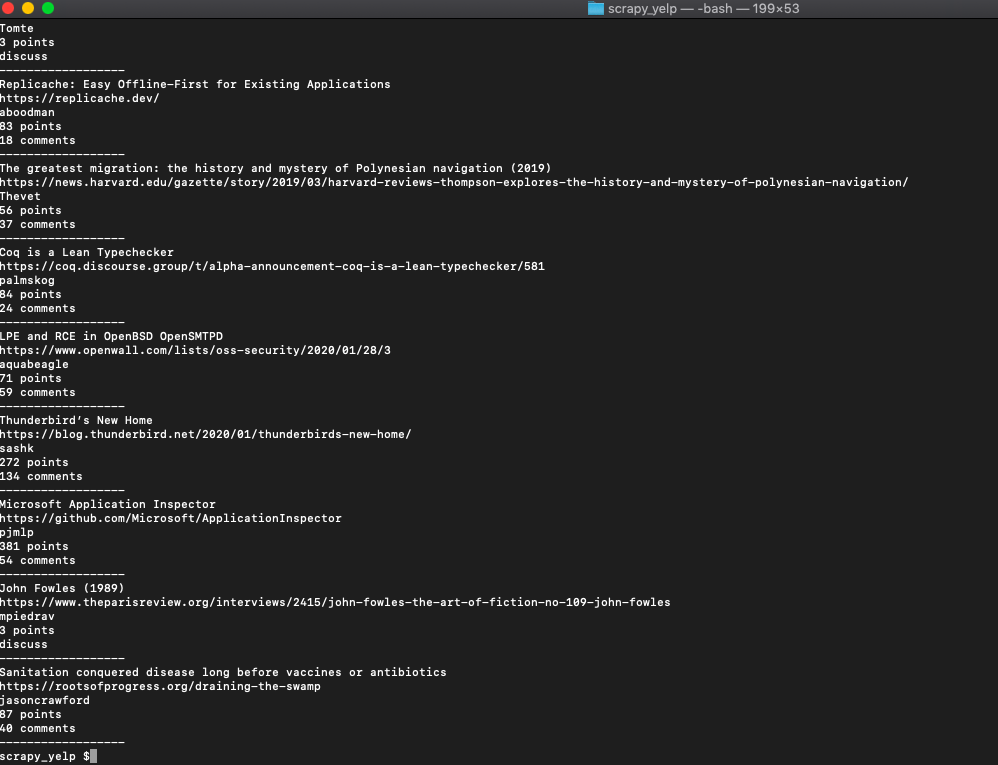

print('')Notice that for getting the comments we try and find the fourth link inside the block that contains the hnuser details like so

item.find_all('a')[3].get_text()

That when run, should print everything we need from each post like this.
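Picking the fourth link by position breaks as soon as the row gains or loses a link. Here is a sketch that finds the comment link by its text instead and turns the score and comment count into integers. The sample row is hypothetical, and treating a "discuss" link as zero comments is an assumption about how HN labels posts without comments; `leading_int` and `parse_details` are illustrative helpers.

```python
import re

from bs4 import BeautifulSoup

# Hypothetical details row modelled on the one read with item.find_all('a')[3];
# "&nbsp;" mirrors the non-breaking space between the count and the word.
DETAILS_HTML = """
<tr><td class="subtext">
  <span class="score">118 points</span> by <a class="hnuser" href="user?id=alice">alice</a>
  <a href="item?id=101">2 hours ago</a> | <a href="hide?id=101">hide</a> |
  <a href="item?id=101">42&nbsp;comments</a>
</td></tr>
"""


def leading_int(text):
    """Return the first integer in text, or 0 if there is none (e.g. 'discuss')."""
    match = re.search(r"\d+", text)
    return int(match.group()) if match else 0


def parse_details(row):
    """Pull score, user and comment count out of a details row."""
    score = row.select_one(".score")
    user = row.select_one(".hnuser")
    comments = 0
    for link in row.find_all("a"):
        # Match the comment link by its text, not its position in the row
        text = link.get_text().replace("\xa0", " ").strip()
        if text.endswith(("comment", "comments", "discuss")):
            comments = leading_int(text)
    return {
        "score": leading_int(score.get_text()) if score else 0,
        "user": user.get_text() if user else None,
        "comments": comments,
    }


row = BeautifulSoup(DETAILS_HTML, "html.parser").tr
print(parse_details(row))
```

Returning plain integers also makes the data easier to sort or filter later.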

We even added a separator to show where each post ends. You can now collect this data into a list or save it to CSV and do whatever you want with it.
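One way to do the CSV part is with the standard library's `csv.DictWriter`. This sketch assumes each post has already been collected into a dict with the keys shown; the records are made-up examples, and writing to an `io.StringIO` buffer keeps the demo self-contained. `write_posts_csv` is an illustrative helper name.

```python
import csv
import io


def write_posts_csv(posts, stream):
    """Write a list of post dicts to any file-like object as CSV."""
    fieldnames = ["title", "link", "user", "score", "comments"]
    writer = csv.DictWriter(stream, fieldnames=fieldnames)
    writer.writeheader()
    writer.writerows(posts)


# Hypothetical records shaped like the data the scraper prints
posts = [
    {"title": "First post", "link": "https://example.com/a", "user": "alice", "score": 118, "comments": 42},
    {"title": "Second, with a comma", "link": "https://example.com/b", "user": "bob", "score": 3, "comments": 0},
]

buffer = io.StringIO()
write_posts_csv(posts, buffer)
print(buffer.getvalue())
```

To write a real file, pass `open('hn_posts.csv', 'w', newline='', encoding='utf-8')` instead of the buffer; the csv module expects files opened with `newline=''`. Titles containing commas are quoted automatically.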

If you want to use this in production and scale to thousands of links, you will find that your IP gets blocked easily. In this scenario, using a rotating proxy service to rotate IPs is almost a must.

Otherwise, automatic location-, usage-, and bot-detection algorithms will tend to block your IP a lot.
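If you manage your own pool of proxies, the rotation itself can be as simple as cycling through them in round-robin order and passing each one to `requests` through its `proxies` argument. This is a minimal sketch: the proxy addresses are placeholders from a documentation-only IP range, and `next_proxy_config` and `fetch_via_rotating_proxy` are hypothetical helpers, not part of any library.

```python
import itertools
import time

import requests

# Placeholder proxy endpoints (203.0.113.0/24 is reserved for documentation);
# replace them with the addresses your proxy provider gives you.
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]

# Endless round-robin iterator over the pool
_proxy_cycle = itertools.cycle(PROXIES)


def next_proxy_config():
    """Return a requests-style proxies dict that uses the next proxy in the pool."""
    proxy = next(_proxy_cycle)
    return {"http": proxy, "https": proxy}


def fetch_via_rotating_proxy(url, headers, delay=1.0, timeout=10):
    """Wait briefly, then fetch url through the next proxy; raise on HTTP errors."""
    time.sleep(delay)  # keep the request rate modest
    response = requests.get(url, headers=headers, proxies=next_proxy_config(), timeout=timeout)
    response.raise_for_status()
    return response
```

Each call to `fetch_via_rotating_proxy` goes out through a different address, so consecutive requests do not all come from one IP.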

Our rotating proxy server, Proxies API, provides a simple API that can solve all IP-blocking problems instantly, with:

- millions of high-speed rotating proxies located all over the world,
- automatic IP rotation,
- automatic User-Agent string rotation (which simulates requests from different, valid web browsers and browser versions), and
- automatic CAPTCHA-solving technology.

Hundreds of our customers have successfully solved the headache of IP blocks with a simple API.

The whole thing can be accessed by a simple API like below in any programming language.

```shell
curl "http://api.proxiesapi.com/?key=API_KEY&url=https://example.com"
```

We have a running offer of 1000 API calls completely free. Register and get your free API Key here.
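The same call can be made from Python with `requests`. In this sketch the endpoint and the `key` and `url` parameters are taken from the curl line, `API_KEY` is a placeholder, and `build_proxiesapi_request` is a hypothetical helper. Passing the parameters as a dict lets `requests` URL-encode the target URL for you.

```python
import requests

API_ENDPOINT = "http://api.proxiesapi.com/"


def build_proxiesapi_request(api_key, target_url):
    """Prepare the same GET request the curl example sends, without sending it."""
    request = requests.Request("GET", API_ENDPOINT, params={"key": api_key, "url": target_url})
    return request.prepare()


prepared = build_proxiesapi_request("API_KEY", "https://news.ycombinator.com/")
print(prepared.url)

# To actually send it (needs a real key and network access):
# response = requests.Session().send(prepared, timeout=30)
```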