Suppose you interview for a developer job that involves building web crawlers. Now suppose you show them that you found the opening because you had built a web crawler to crawl job listings about web crawling. So meta! The job should be yours.

Today let's look at scraping job listings data using BeautifulSoup and the requests module in Python.

Here is a simple script that does that. BeautifulSoup will help us parse the page and extract the key details of each listing: the title, company, location, and summary.

To start with, this is the boilerplate code we need to get the indeed.com search results page and set up BeautifulSoup to help us use CSS selectors to query the page for meaningful data.

    # -*- coding: utf-8 -*-
    from bs4 import BeautifulSoup
    import requests

    # Browser-like User-Agent header sent with the request
    headers = {'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}
    url = 'https://www.indeed.com/jobs?q=web crawler'

    # Fetch the search results page
    response = requests.get(url, headers=headers)
    # print(response.content)

    # Parse the HTML so we can query it with CSS selectors
    soup = BeautifulSoup(response.content, 'lxml')

We are also passing the User-Agent header to simulate a browser call so we don't get blocked.
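The search URL above contains a space in its query string. requests will encode it for you, but if you want to build search URLs for different keywords, here is a minimal sketch using only the standard library. The helper name `build_search_url` is hypothetical; the `q` parameter is the one from the URL above.

```python
from urllib.parse import urlencode

BASE_URL = 'https://www.indeed.com/jobs'

def build_search_url(query):
    """Return the search URL with the query safely encoded."""
    # urlencode turns spaces into '+' and escapes other special characters
    return BASE_URL + '?' + urlencode({'q': query})

print(build_search_url('web crawler'))
```

You can then pass the result to `requests.get` exactly as in the script above.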

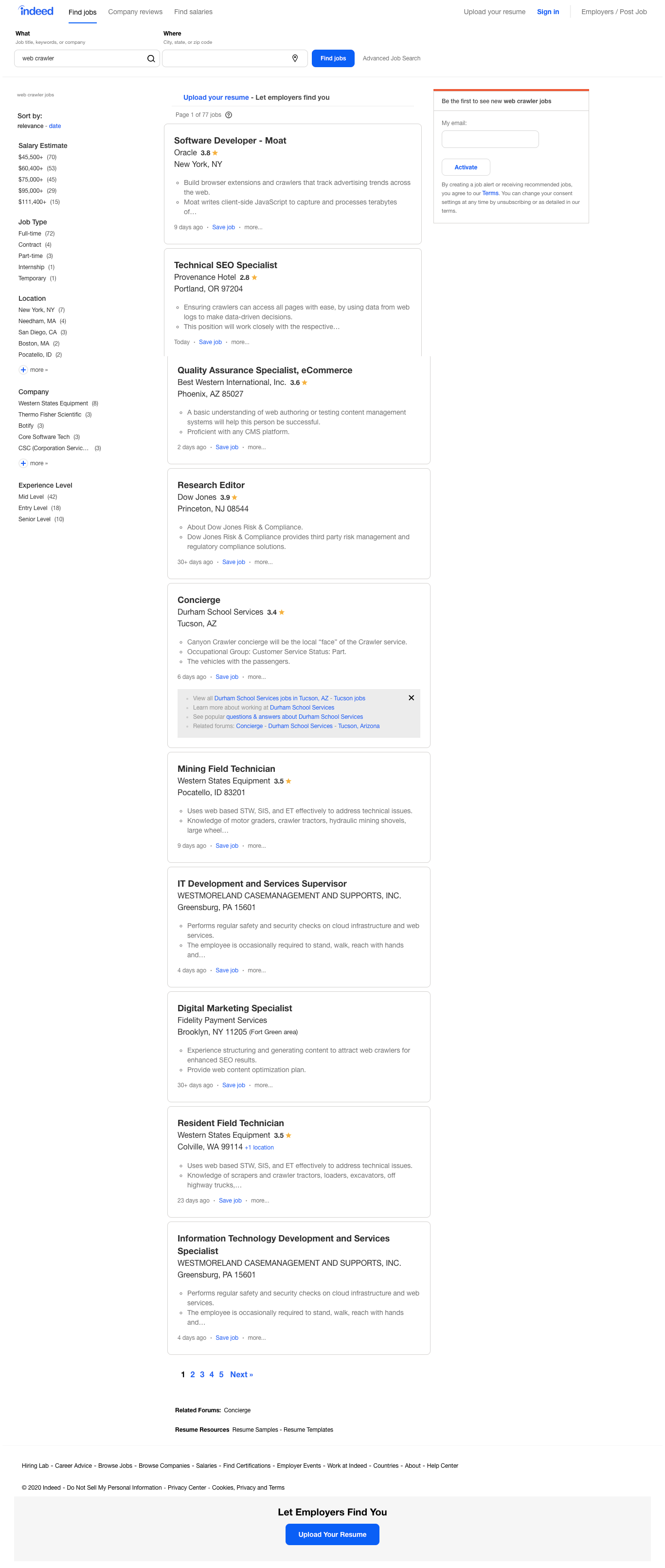

Now let's analyze the Indeed search results page. This is how it looks.

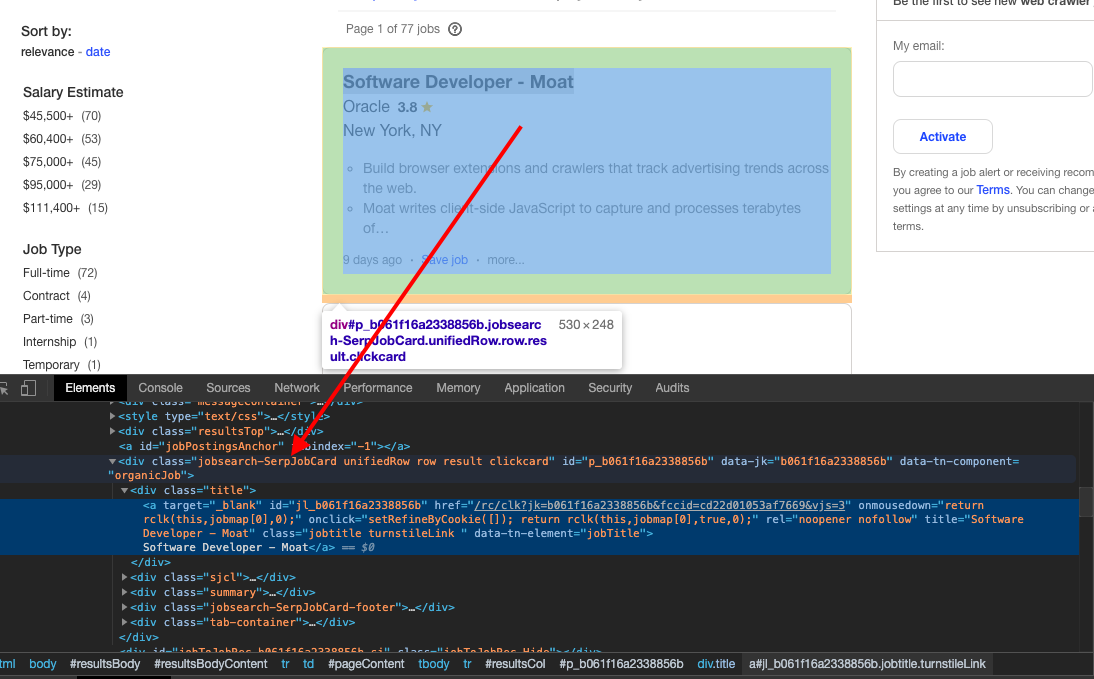

When we inspect the page, we find that each item's HTML is encapsulated in a tag with the class jobsearch-SerpJobCard.

We can use this to break the HTML document into cards, each containing the information for an individual listing, like this:

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url = 'https://www.indeed.com/jobs?q=web crawler'

response=requests.get(url,headers=headers)

#print(response.content)

soup=BeautifulSoup(response.content,'lxml')

for item in soup.select('.jobsearch-SerpJobCard'):

try:

print('----------------------------------------')

print(item)

except Exception as e:

#raise e

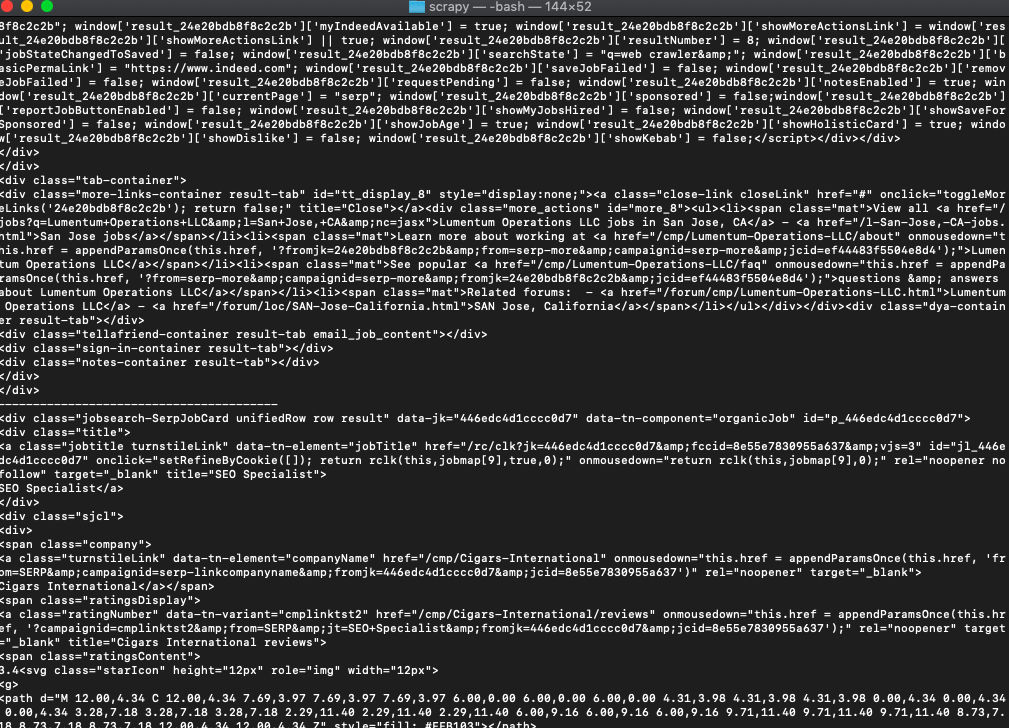

print('')And when you run it:

python3 scrapeIndeed.pyYou can tell that the code is isolating the v-cards HTML.

On further inspection, you can see that the title of the job always has the class title. So let's try and retrieve that...

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url = 'https://www.indeed.com/jobs?q=web crawler'

response=requests.get(url,headers=headers)

#print(response.content)

soup=BeautifulSoup(response.content,'lxml')

for item in soup.select('.jobsearch-SerpJobCard'):

try:

print('----------------------------------------')

print(item.select('.title')[0].get_text().strip())

print('----------------------------------------')

except Exception as e:

#raise e

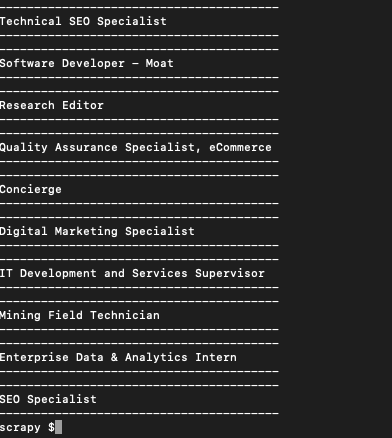

print('')That will get us the titles..

Bingo!

Now let's get the other pieces of data.

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

headers = {'User-Agent':'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'}

url = 'https://www.indeed.com/jobs?q=web crawler'

response=requests.get(url,headers=headers)

#print(response.content)

soup=BeautifulSoup(response.content,'lxml')

for item in soup.select('.jobsearch-SerpJobCard'):

try:

print('----------------------------------------')

print(item.select('.title')[0].get_text().strip())

print(item.select('.company')[0].get_text().strip())

print(item.select('.location')[0].get_text().strip())

print(item.select('.summary')[0].get_text().strip())

print('----------------------------------------')

except Exception as e:

#raise e

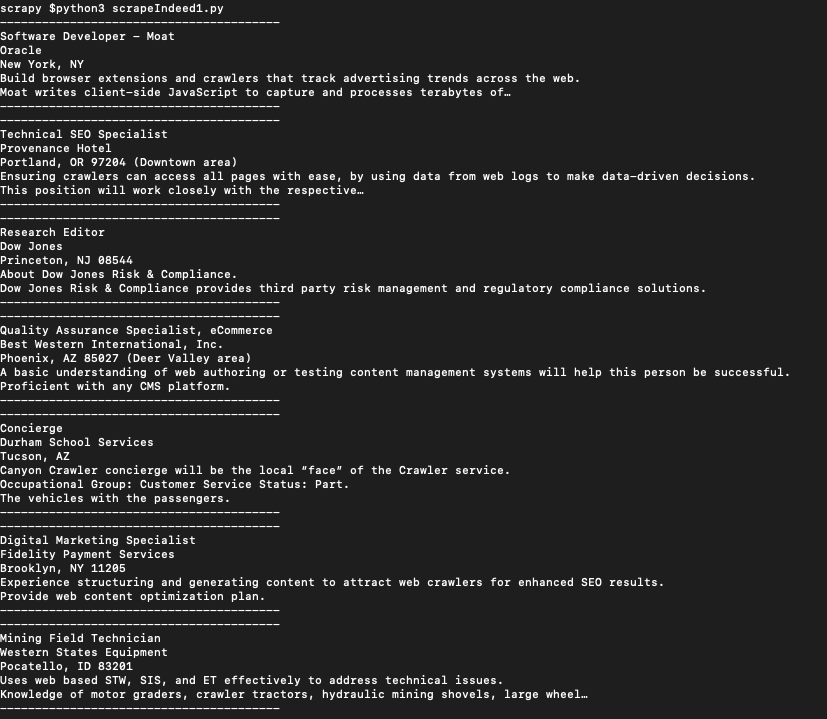

print('')And when run...

Produces all the info we need including company name, address, and summary of the job.
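One thing to watch for: indexing with `[0]` raises an IndexError when a card lacks a field, so a listing without a location stops printing partway through. Below is a minimal sketch of a more forgiving approach that collects each card into a dict and stores None for missing fields. The sample HTML is hypothetical markup that only mimics the class names discussed above, and the helpers `first_text` and `parse_cards` are illustrative names, not part of any library. It uses the built-in 'html.parser' so it runs without lxml.

```python
from bs4 import BeautifulSoup

# Hypothetical sample markup that mimics the card classes described above;
# real Indeed pages are more complex and may change over time.
SAMPLE_HTML = """
<div class="jobsearch-SerpJobCard">
  <h2 class="title"><a>Web Crawler Engineer</a></h2>
  <span class="company">Acme Corp</span>
  <span class="location">Austin, TX</span>
  <div class="summary">Build and maintain crawlers.</div>
</div>
<div class="jobsearch-SerpJobCard">
  <h2 class="title"><a>Data Engineer</a></h2>
  <span class="company">Example Inc</span>
  <div class="summary">Scrape and clean job data.</div>
</div>
"""

FIELDS = ('title', 'company', 'location', 'summary')

def first_text(card, selector):
    """Return the stripped text of the first match, or None if nothing matches."""
    node = card.select_one(selector)
    return node.get_text().strip() if node is not None else None

def parse_cards(html):
    """Turn every job card in the HTML into a dict of its fields."""
    soup = BeautifulSoup(html, 'html.parser')
    return [
        {field: first_text(card, '.' + field) for field in FIELDS}
        for card in soup.select('.jobsearch-SerpJobCard')
    ]

jobs = parse_cards(SAMPLE_HTML)
for job in jobs:
    print(job)
```

To use it on the live page, you would pass `response.content` to `parse_cards` instead of the sample string.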

In more advanced implementations, you will even need to rotate the User-Agent string so Indeed.com can't tell it's the same browser!

As you get a little more advanced, you will realize that Indeed.com can simply block your IP, ignoring all your other tricks. This is a bummer, and this is where most web crawling projects fail.
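As a rough illustration of User-Agent rotation, here is a minimal sketch that picks a header at random for each request. The pool is an assumption for illustration: the first string is the one used in the script above, the other two are examples of common desktop browser strings, and `random_headers` is a hypothetical helper name.

```python
import random

# Illustrative pool; the first entry is the User-Agent used earlier in this
# post, the others are assumed examples of common desktop browsers.
USER_AGENTS = [
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9',
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0.0.0 Safari/537.36',
    'Mozilla/5.0 (X11; Linux x86_64; rv:121.0) Gecko/20100101 Firefox/121.0',
]

def random_headers():
    """Build request headers with a User-Agent picked at random from the pool."""
    return {'User-Agent': random.choice(USER_AGENTS)}

headers = random_headers()
print(headers['User-Agent'])
```

Calling `random_headers()` before each `requests.get(url, headers=...)` call gives every request a chance of presenting a different browser.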

Overcoming IP Blocks

Investing in a private rotating proxy service like Proxies API can often make the difference between a headache-free web scraping project that gets the job done consistently and one that never really works.

Plus, with our running offer of 1000 free API calls, you have almost nothing to lose by using our rotating proxy and comparing notes. It only takes one line of integration, so it's hardly disruptive.

Our rotating proxy server, Proxies API, provides a simple API that can solve IP blocking problems instantly.

- With millions of high-speed rotating proxies located all over the world,

- With our automatic IP rotation,

- With our automatic User-Agent string rotation (which simulates requests from different, valid web browsers and web browser versions),

- With our automatic CAPTCHA solving technology,

hundreds of our customers have successfully solved the headache of IP blocks with a simple API.

The whole thing can be accessed through a simple API call like the one below, from any programming language.

    curl "http://api.proxiesapi.com/?key=API_KEY&url=https://example.com"

We have a running offer of 1000 API calls completely free. Register and get your free API Key here.
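For the Python script in this post, the same call can be sketched as a small URL builder. The endpoint and the `key` and `url` parameters come from the curl example above; the helper name `build_proxy_url` is hypothetical, and percent-encoding the target URL is an assumption based on how query strings normally work rather than on anything stated above.

```python
from urllib.parse import urlencode

# Endpoint taken from the curl example above
PROXY_ENDPOINT = 'http://api.proxiesapi.com/'

def build_proxy_url(api_key, target_url):
    """Wrap a target URL in a proxy API request URL."""
    # Assumption: the service accepts a percent-encoded `url` value
    query = urlencode({'key': api_key, 'url': target_url})
    return PROXY_ENDPOINT + '?' + query

proxied = build_proxy_url('API_KEY', 'https://www.indeed.com/jobs?q=web+crawler')
print(proxied)
```

In the scraper, you would then fetch `proxied` with `requests.get` in place of the direct Indeed URL, leaving the BeautifulSoup parsing unchanged.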