In the beginning stages of a web crawling project or when you have to scale it to only a few hundred requests, you might want a simple proxy rotator that uses the free proxy pools available on the internet to populate itself now and then.

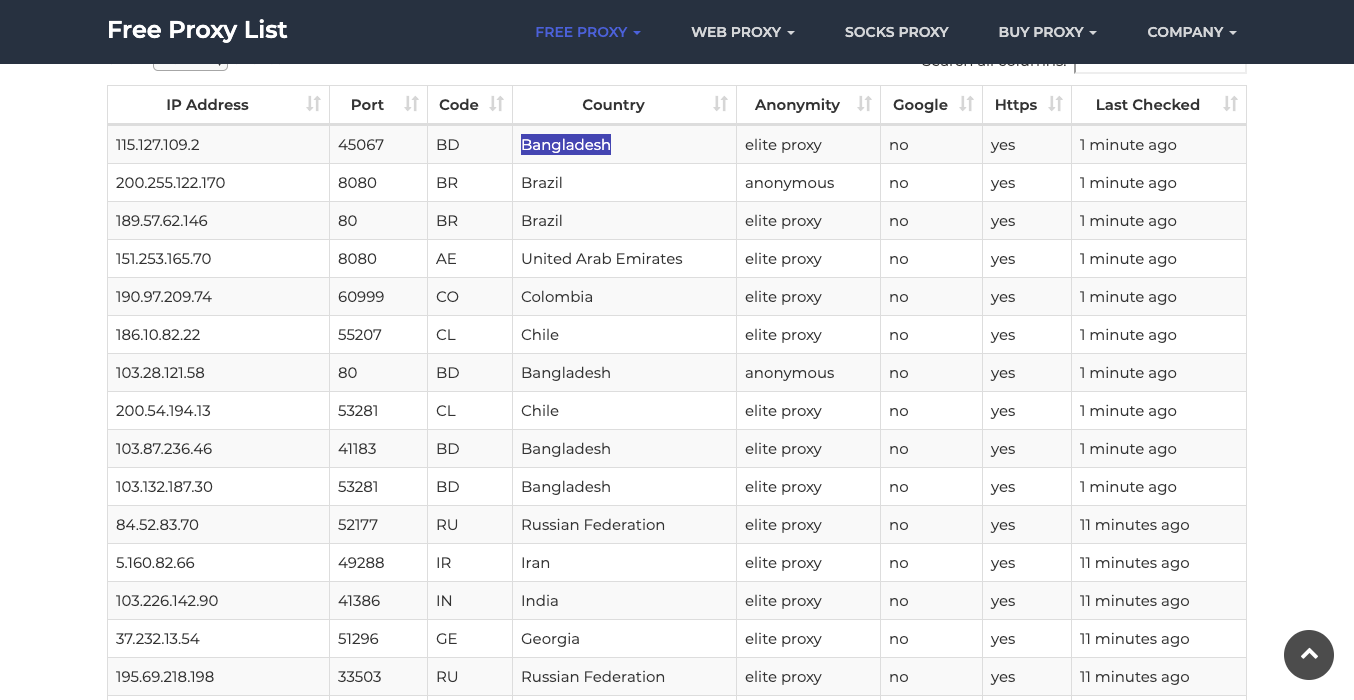

We can use a website like https://sslproxies.org/ to fetch public proxies every few minutes and use them in our projects.

Here is a simple tutorial that does that. It assumes you have Beautiful Soup, requests, and lxml installed.

Our basic fetch code looks like this.

```python
# -*- coding: utf-8 -*-
from bs4 import BeautifulSoup
import requests

url = 'https://sslproxies.org/'
header = {
    'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'
}

response = requests.get(url, headers=header)
# print(response.content)
```

This fetches the HTML from sslproxies.org and sends a browser User-Agent string so the request looks like it comes from a regular web browser.

`response.content` holds the raw HTML (as bytes).

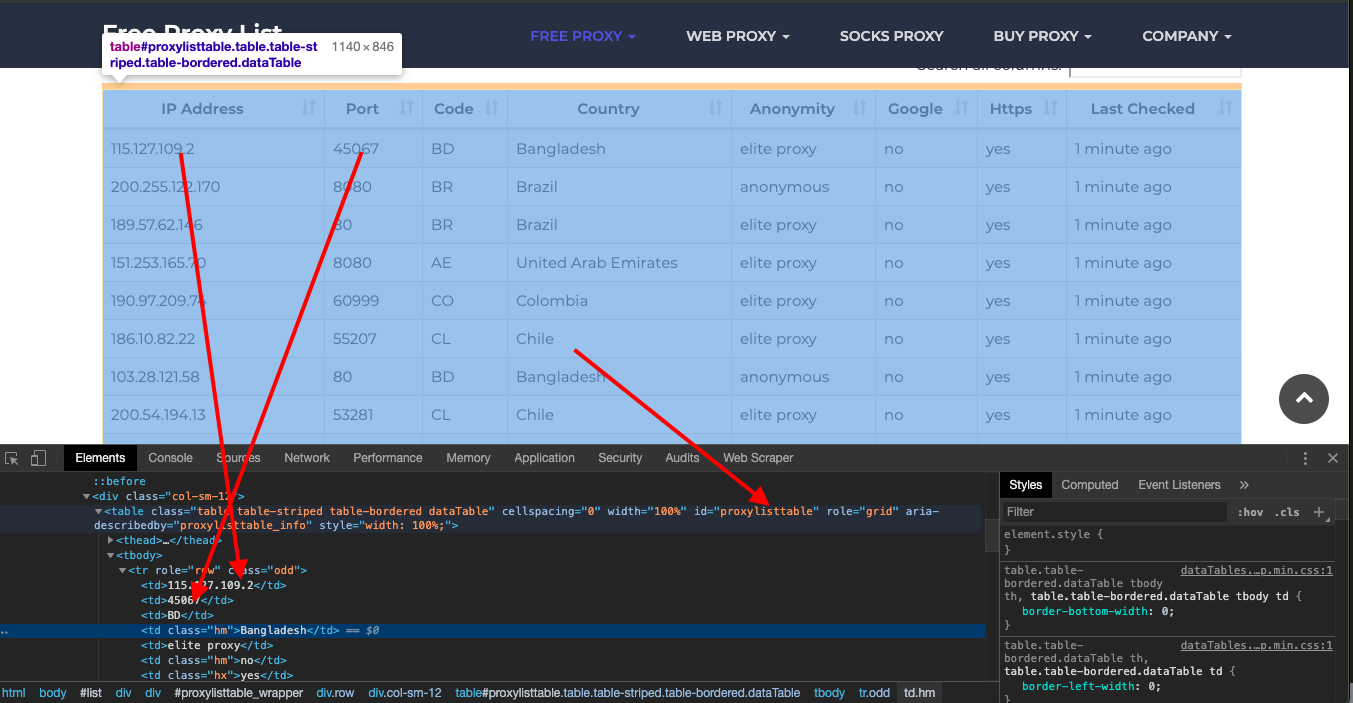

If you inspect the page with your browser's developer tools, you will see that the proxy list sits in a table with the id `proxylisttable`.

The IP and port are the first and second cells (`td` elements) in each row.

The following code selects the rows of that table, iterates over them, and pulls the first and second cells out of each row.

```python
soup = BeautifulSoup(response.content, 'lxml')
proxies = []

for item in soup.select('#proxylisttable tr'):
    cells = item.select('td')
    if len(cells) < 2:
        continue  # the header row has no <td> cells, so skip it
    proxies.append({'ip': cells[0].get_text(), 'port': cells[1].get_text()})
```

Now, let's organize this as a proper function that we can call whenever we want a fresh set of proxies, storing the results in a list.

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

from random import randrange

proxies = []

def LoadUpProxies():

url='https://sslproxies.org/'

header = {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'

}

response=requests.get(url,headers=header)

soup=BeautifulSoup(response.content, 'lxml')

for item in soup.select('#proxylisttable tr'):

try:

proxies.append({'ip': item.select('td')[0].get_text(), 'port': item.select('td')[1].get_text()})

except:

print('')

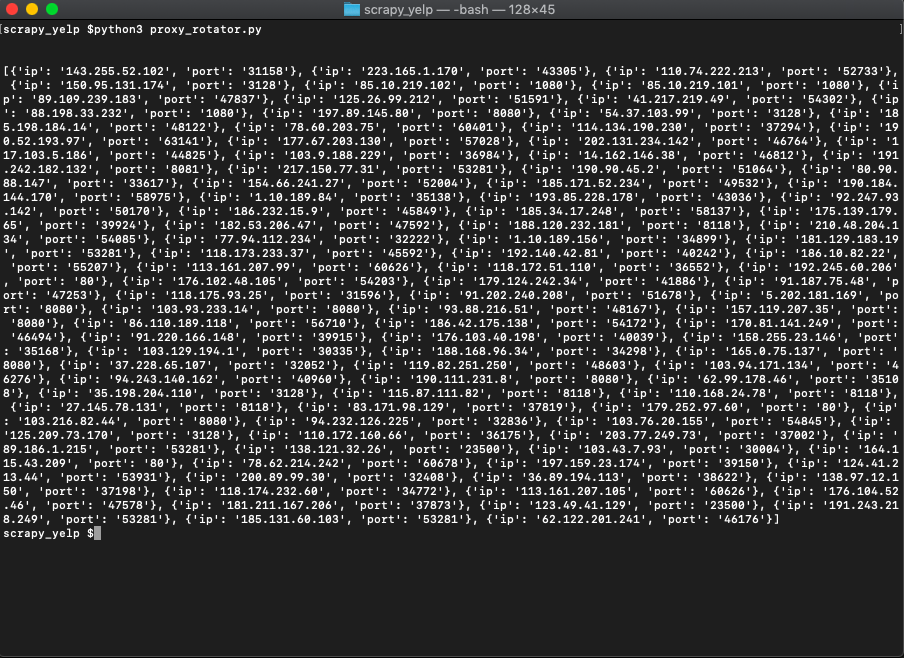

LoadUpProxies()

print(proxies)If you run it, it should print the results like so.
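To see how the row filter behaves without hitting the live site, here is a minimal sketch that runs the same `#proxylisttable tr` selector over a hard-coded HTML fragment. The fragment is hypothetical: it only mimics the layout described above, assuming a header row of `th` cells followed by data rows whose first two `td` cells are the IP and port, and it uses documentation-reserved addresses rather than real proxies. The helper name `parse_proxy_rows` is made up for this example, and it uses Beautiful Soup's built-in `html.parser` so lxml is not needed.

```python
from bs4 import BeautifulSoup

# Hypothetical fragment mimicking the proxy table layout (not real proxies)
SAMPLE_HTML = """
<table id="proxylisttable">
  <thead><tr><th>IP Address</th><th>Port</th><th>Code</th></tr></thead>
  <tbody>
    <tr><td>203.0.113.10</td><td>8080</td><td>US</td></tr>
    <tr><td>198.51.100.7</td><td>3128</td><td>DE</td></tr>
  </tbody>
</table>
"""


def parse_proxy_rows(html):
    """Return a list of {'ip': ..., 'port': ...} dicts from #proxylisttable."""
    soup = BeautifulSoup(html, 'html.parser')
    rows = []
    for tr in soup.select('#proxylisttable tr'):
        cells = tr.select('td')
        if len(cells) < 2:
            # Header rows use <th>, so they have no <td> cells to read
            continue
        rows.append({'ip': cells[0].get_text(strip=True),
                     'port': cells[1].get_text(strip=True)})
    return rows


print(parse_proxy_rows(SAMPLE_HTML))
# [{'ip': '203.0.113.10', 'port': '8080'}, {'ip': '198.51.100.7', 'port': '3128'}]
```

Checking the cell count, rather than wrapping the append in a bare `try/except`, skips the header row without also hiding unrelated errors.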

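Next, let's pick a proxy from the list at random. The `randrange` function from Python's `random` module does this by returning a random index.

```python
from random import randrange

# Returns a random integer from 0 to 9; the upper bound 10 is excluded
randrange(10)
```

Now, putting it all together: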

# -*- coding: utf-8 -*-

from bs4 import BeautifulSoup

import requests

from random import randrange

proxies = []

def LoadUpProxies():

url='https://sslproxies.org/'

header = {

'User-Agent': 'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_2) AppleWebKit/601.3.9 (KHTML, like Gecko) Version/9.0.2 Safari/601.3.9'

}

response=requests.get(url,headers=header)

soup=BeautifulSoup(response.content, 'lxml')

for item in soup.select('#proxylisttable tr'):

try:

proxies.append({'ip': item.select('td')[0].get_text(), 'port': item.select('td')[1].get_text()})

except:

print('')

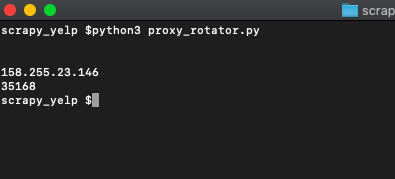

LoadUpProxies()

rnd=randrange(len(proxies))

randomIP=proxies[rnd]['ip']

randomPort=proxies[rnd]['port']

print(randomIP)

print(randomPort)That should give you a different IP every time you call it. You should call the LoadUpProxies function every few minutes apart, so it has the latest IPs.
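A minimal sketch of that refresh schedule, assuming you want the list reloaded lazily whenever it is more than five minutes old. `ProxyPool` and its methods are hypothetical names, and `loader` stands in for any function that returns the scraped list. The `as_requests_proxies` helper formats one entry for the `proxies=` argument of `requests.get()`, assuming the proxy accepts plain HTTP connections for both HTTP and HTTPS targets.

```python
import random
import time


class ProxyPool:
    """Cache a proxy list and reload it once it is older than max_age seconds."""

    def __init__(self, loader, max_age=300, clock=time.monotonic):
        self._loader = loader    # zero-argument callable returning [{'ip':..., 'port':...}]
        self._max_age = max_age  # seconds before the cached list counts as stale
        self._clock = clock      # injectable so the refresh logic can be tested
        self._proxies = []
        self._loaded_at = None

    def _refresh_if_stale(self):
        now = self._clock()
        if self._loaded_at is None or now - self._loaded_at >= self._max_age:
            self._proxies = list(self._loader())
            self._loaded_at = now

    def random_proxy(self):
        """Return a random proxy dict, reloading the list first if it is stale."""
        self._refresh_if_stale()
        if not self._proxies:
            raise RuntimeError('proxy list is empty')
        return random.choice(self._proxies)

    @staticmethod
    def as_requests_proxies(proxy):
        """Format one entry for the proxies= argument of requests.get()."""
        address = f"http://{proxy['ip']}:{proxy['port']}"
        return {'http': address, 'https': address}


# Demo with a hypothetical one-entry loader instead of the live scraper
pool = ProxyPool(lambda: [{'ip': '203.0.113.10', 'port': '8080'}])
print(ProxyPool.as_requests_proxies(pool.random_proxy()))
# {'http': 'http://203.0.113.10:8080', 'https': 'http://203.0.113.10:8080'}
```

To plug in the scraper from this tutorial, wrap it as `def load(): LoadUpProxies(); return list(proxies)` and pass `load` as the loader. Then `requests.get(url, proxies=ProxyPool.as_requests_proxies(pool.random_proxy()), timeout=10)` sends a request through a random proxy. Reloading on access avoids running a separate timer or background thread.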

If you want to use this in production and want to scale to thousands of links, then you will find that many free proxies won't hold up under the speed and reliability requirements. In this scenario, using a rotating proxy service to rotate IPs is almost a must.

Otherwise, your IPs tend to get blocked frequently by automated location, usage, and bot detection algorithms.
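While you are still on free proxies, one common way to cope with dead or slow entries is to retry through a different proxy and drop the one that failed. A minimal sketch, assuming the list has the same `{'ip': ..., 'port': ...}` shape as above; `fetch_with_failover` is a hypothetical helper, and `fetch` is any function you supply that performs the request and raises an exception on failure.

```python
import random


def fetch_with_failover(url, pool, fetch, max_attempts=3, rng=random):
    """Try up to max_attempts proxies from pool, removing each one that fails.

    pool:  list of {'ip': ..., 'port': ...} dicts; it is modified in place.
    fetch: caller-supplied fetch(url, proxy) that returns a response or
           raises if the proxy is dead, slow, or blocked.
    """
    last_error = None
    for _ in range(max_attempts):
        if not pool:
            break  # nothing left to try; reload the list before retrying
        proxy = rng.choice(pool)
        try:
            return fetch(url, proxy)
        except Exception as exc:  # treat any failure as a bad proxy
            last_error = exc
            pool.remove(proxy)
    raise RuntimeError(f'no working proxy found for {url}') from last_error
```

With requests, `fetch` could build the `proxies=` mapping from the entry, call `requests.get(url, proxies=..., timeout=10)`, and then call `raise_for_status()` so HTTP error responses also count as failures.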

Our rotating proxy server, Proxies API, provides a simple API that can solve all IP blocking problems instantly.

- With millions of high-speed rotating proxies located all over the world

- With our automatic IP rotation

- With our automatic User-Agent-String rotation (which simulates requests from different, valid web browsers and web browser versions)

- With our automatic CAPTCHA solving technology

Hundreds of our customers have successfully solved the headache of IP blocks with a simple API.

The whole thing can be accessed through a simple API call, like the one below, from any programming language.

```bash
curl "http://api.proxiesapi.com/?key=API_KEY&url=https://example.com"
```

We have a running offer of 1000 API calls completely free. Register and get your free API key here.
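For readers working in Python, here is a sketch that only builds the request URL from the curl example, percent-encoding the target address as a query parameter. It assumes the endpoint decodes standard URL-encoded parameters; `proxiesapi_url` is a hypothetical helper name, and `API_KEY` is the same placeholder used in the curl command.

```python
from urllib.parse import urlencode

API_ENDPOINT = 'http://api.proxiesapi.com/'


def proxiesapi_url(api_key, target_url):
    """Build the API request URL, encoding the target URL as a query value."""
    return API_ENDPOINT + '?' + urlencode({'key': api_key, 'url': target_url})


print(proxiesapi_url('API_KEY', 'https://example.com'))
# http://api.proxiesapi.com/?key=API_KEY&url=https%3A%2F%2Fexample.com
```

You can pass the result to `urllib.request.urlopen` or `requests.get`. With requests, `requests.get(API_ENDPOINT, params={'key': ..., 'url': ...})` performs the same encoding for you.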